This post uses Spark to count page views for a website and writes the results to HDFS. The data source is an nginx access log, tmp.log.

The nginx access.log format, shown as an excerpt from the log:

    127.0.0.1 - - [05/Sep/2018:23:18:22 +0800] "GET /4DAnalog/clashreport/delete HTTP/1.1" 502 575 "-" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36"
    127.0.0.1 - - [05/Sep/2018:23:18:22 +0800] "GET /favicon.ico HTTP/1.1" 502 575 "http://localhost:8080/4DAnalog/clashreport/delete" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36"
    127.0.0.1 - - [05/Sep/2018:23:18:40 +0800] "GET /4DAnalog/clashreport/find HTTP/1.1" 502 575 "-" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36"
    127.0.0.1 - - [05/Sep/2018:23:18:40 +0800] "GET /favicon.ico HTTP/1.1" 502 575 "http://localhost:8080/4DAnalog/clashreport/find" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36"
    127.0.0.1 - - [05/Sep/2018:23:18:42 +0800] "GET /4DAnalog/clashreport/find HTTP/1.1" 502 575 "-" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36"
    127.0.0.1 - - [05/Sep/2018:23:18:42 +0800] "GET /favicon.ico HTTP/1.1" 502 575 "http://localhost:8080/4DAnalog/clashreport/find" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36"
    127.0.0.1 - - [05/Sep/2018:23:18:43 +0800] "GET /4DAnalog/clashreport/find HTTP/1.1" 502 575 "-" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36"
    127.0.0.1 - - [05/Sep/2018:23:18:43 +0800] "GET /favicon.ico HTTP/1.1" 502 575 "http://localhost:8080/4DAnalog/clashreport/find" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36"
    127.0.0.1 - - [05/Sep/2018:23:18:43 +0800] "GET /4DAnalog/clashreport/find HTTP/1.1" 502 575 "-" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36"
    127.0.0.1 - - [05/Sep/2018:23:18:44 +0800] "GET /favicon.ico HTTP/1.1" 502 575 "http://localhost:8080/4DAnalog/clashreport/find" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36"
    127.0.0.1 - - [05/Sep/2018:23:18:52 +0800] "GET /4DAnalog/clashreport/delete HTTP/1.1" 502 575 "-" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36"
    127.0.0.1 - - [05/Sep/2018:23:18:53 +0800] "GET /favicon.ico HTTP/1.1" 502 575 "http://localhost:8080/4DAnalog/clashreport/delete" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36"
    127.0.0.1 - - [05/Sep/2018:23:18:59 +0800] "GET /4DAnalog/chat/delete HTTP/1.1" 502 575 "-" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36"
    127.0.0.1 - - [05/Sep/2018:23:18:59 +0800] "GET /favicon.ico HTTP/1.1" 502 575 "http://localhost:8080/4DAnalog/chat/delete" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36"
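To make the log format concrete, here is a minimal sketch of how one line breaks into space-separated fields and why index 6 holds the request path. The `LogFields` object and its `requestPath` helper are hypothetical names used only for illustration, and the sample line is truncated after the response size for brevity.

```scala
// Hypothetical helper for illustration; not part of the project built below.
object LogFields {
  /** Returns the request path (index 6 after splitting on single spaces), if the line has one. */
  def requestPath(line: String): Option[String] = {
    // Fields: 0 client IP, 1 "-", 2 "-", 3 "[date:time", 4 "zone]", 5 "\"GET", 6 path, 7 protocol, ...
    val fields = line.split(" ")
    if (fields.length > 6) Some(fields(6)) else None
  }

  def main(args: Array[String]): Unit = {
    val sample =
      """127.0.0.1 - - [05/Sep/2018:23:18:22 +0800] "GET /favicon.ico HTTP/1.1" 502 575"""
    println(requestPath(sample))    // Some(/favicon.ico)
    println(requestPath("garbage")) // None
  }
}
```

Returning an `Option` is just one way to handle lines that are too short; the point is that a naive `split(" ")` only works because the timestamp always contributes exactly two fields.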

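Preparation

Upload tmp.log to HDFS before running the job. If the /urlcount directory does not exist yet, create it first with hdfs dfs -mkdir -p /urlcount.

    hdfs dfs -put ~/tmp.log /urlcount/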

Environment setup and code

1. Create a Maven project

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>com.zonegood</groupId>
        <artifactId>hellospark</artifactId>
        <version>1.0-SNAPSHOT</version>

        <properties>
            <maven.compiler.source>1.8</maven.compiler.source>
            <maven.compiler.target>1.8</maven.compiler.target>
            <encoding>UTF-8</encoding>
            <scala.version>2.10.6</scala.version>
            <scala.compat.version>2.10</scala.compat.version>
        </properties>

        <dependencies>
            <dependency>
                <groupId>org.scala-lang</groupId>
                <artifactId>scala-library</artifactId>
                <version>${scala.version}</version>
            </dependency>
            <dependency>
                <groupId>org.apache.spark</groupId>
                <artifactId>spark-core_2.10</artifactId>
                <version>1.5.2</version>
            </dependency>
            <dependency>
                <groupId>org.apache.spark</groupId>
                <artifactId>spark-streaming_2.10</artifactId>
                <version>1.5.2</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-client</artifactId>
                <version>2.6.2</version>
            </dependency>
        </dependencies>

        <build>
            <sourceDirectory>src/main/scala</sourceDirectory>
            <testSourceDirectory>src/test/scala</testSourceDirectory>
            <plugins>
                <!-- Compiles the Scala sources -->
                <plugin>
                    <groupId>net.alchim31.maven</groupId>
                    <artifactId>scala-maven-plugin</artifactId>
                    <version>3.2.0</version>
                    <executions>
                        <execution>
                            <goals>
                                <goal>compile</goal>
                                <goal>testCompile</goal>
                            </goals>
                            <configuration>
                                <args>
                                    <arg>-make:transitive</arg>
                                    <arg>-dependencyfile</arg>
                                    <arg>${project.build.directory}/.scala_dependencies</arg>
                                </args>
                            </configuration>
                        </execution>
                    </executions>
                </plugin>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-surefire-plugin</artifactId>
                    <version>2.18.1</version>
                    <configuration>
                        <useFile>false</useFile>
                        <disableXmlReport>true</disableXmlReport>
                        <includes>
                            <include>**/*Test.*</include>
                            <include>**/*Suite.*</include>
                        </includes>
                    </configuration>
                </plugin>
                <!-- Bundles the project and its dependencies into a single jar -->
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-shade-plugin</artifactId>
                    <version>2.3</version>
                    <executions>
                        <execution>
                            <phase>package</phase>
                            <goals>
                                <goal>shade</goal>
                            </goals>
                            <configuration>
                                <filters>
                                    <filter>
                                        <artifact>*:*</artifact>
                                        <excludes>
                                            <!-- Drop signature files that would invalidate the merged jar -->
                                            <exclude>META-INF/*.SF</exclude>
                                            <exclude>META-INF/*.DSA</exclude>
                                            <exclude>META-INF/*.RSA</exclude>
                                        </excludes>
                                    </filter>
                                </filters>
                                <transformers>
                                    <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                                        <!-- Entry point; matches the object created in step 2 -->
                                        <mainClass>com.zomegood.UrlCount.Main</mainClass>
                                    </transformer>
                                </transformers>
                            </configuration>
                        </execution>
                    </executions>
                </plugin>
            </plugins>
        </build>
    </project>

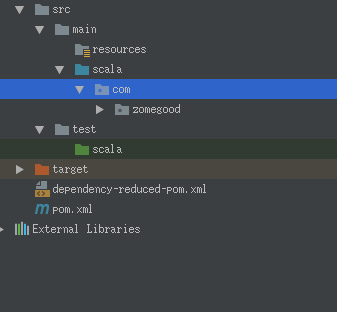

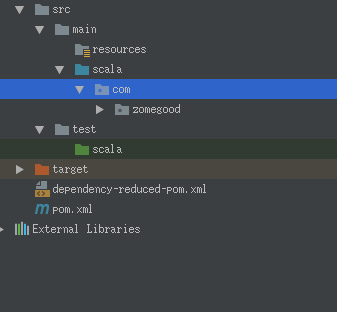

If the src/main/scala directory does not exist, create it manually.

2. Create the object com.zomegood.UrlCount.Main in Main.scala

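    package com.zomegood.UrlCount

    import org.apache.spark.{SparkConf, SparkContext}

    object Main {
      def main(args: Array[String]): Unit = {
        // args(0): input log path; args(1): output directory
        val conf = new SparkConf().setAppName("UrlCount")
        val sc = new SparkContext(conf)

        // Split each log line on spaces; field 6 is the request path, e.g. /favicon.ico
        val urlPairs = sc.textFile(args(0))
          .map(_.split(" "))
          .filter(_.length > 6) // skip malformed lines that have no request path
          .map(arr => (arr(6), 1))

        // Sum the hits per URL and write the (url, count) pairs to the output directory
        urlPairs.reduceByKey(_ + _).saveAsTextFile(args(1))

        sc.stop()
      }
    }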
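The map and reduceByKey steps can be tried without a cluster. The following is a rough sketch that mirrors the same logic on a plain Scala `Seq`, using `groupBy` in place of `reduceByKey`; the `UrlCountLocal` object and `countUrls` function are hypothetical names, and the input lines are shortened versions of the log excerpt.

```scala
// Hypothetical local equivalent of the Spark job, for experimenting without a cluster.
object UrlCountLocal {
  /** Counts hits per request path, mirroring map(arr => (arr(6), 1)) followed by reduceByKey(_ + _). */
  def countUrls(lines: Seq[String]): Map[String, Int] =
    lines
      .map(_.split(" "))
      .filter(_.length > 6)        // ignore lines without a request path
      .map(arr => (arr(6), 1))     // (url, 1) pairs, as in the Spark job
      .groupBy(_._1)               // collect the pairs that share a url
      .map { case (url, pairs) => (url, pairs.map(_._2).sum) }

  def main(args: Array[String]): Unit = {
    val lines = Seq(
      """127.0.0.1 - - [05/Sep/2018:23:18:40 +0800] "GET /4DAnalog/clashreport/find HTTP/1.1" 502 575""",
      """127.0.0.1 - - [05/Sep/2018:23:18:40 +0800] "GET /favicon.ico HTTP/1.1" 502 575""",
      """127.0.0.1 - - [05/Sep/2018:23:18:42 +0800] "GET /4DAnalog/clashreport/find HTTP/1.1" 502 575"""
    )
    // Prints one (url, count) pair per line; the order of a Map is not guaranteed.
    countUrls(lines).foreach(println)
  }
}
```

On an RDD, reduceByKey is preferable to a groupBy because it combines counts on each partition before shuffling; on a small local collection the difference does not matter.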

3. Build the jar with Maven

Run:

    mvn clean package

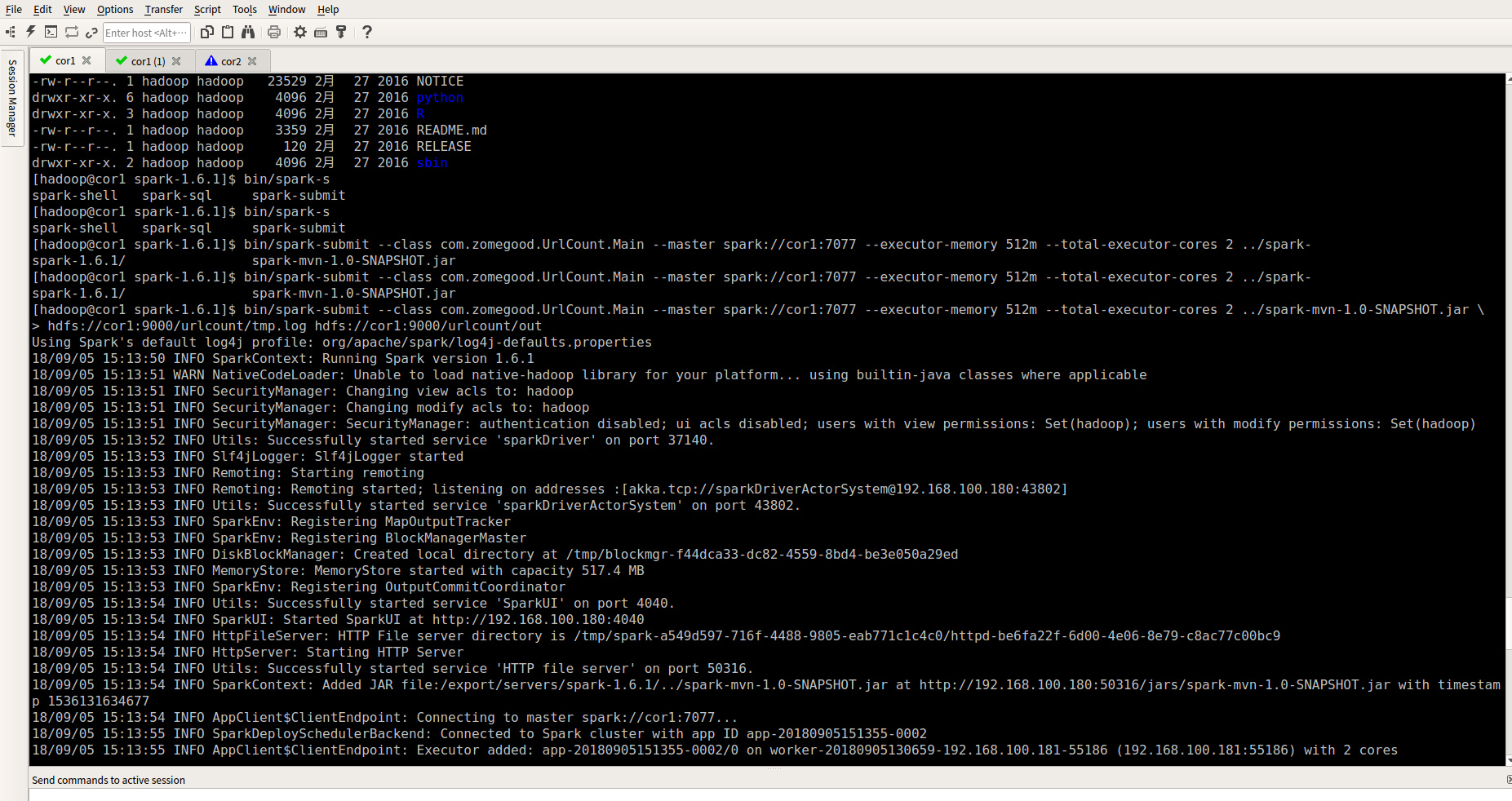

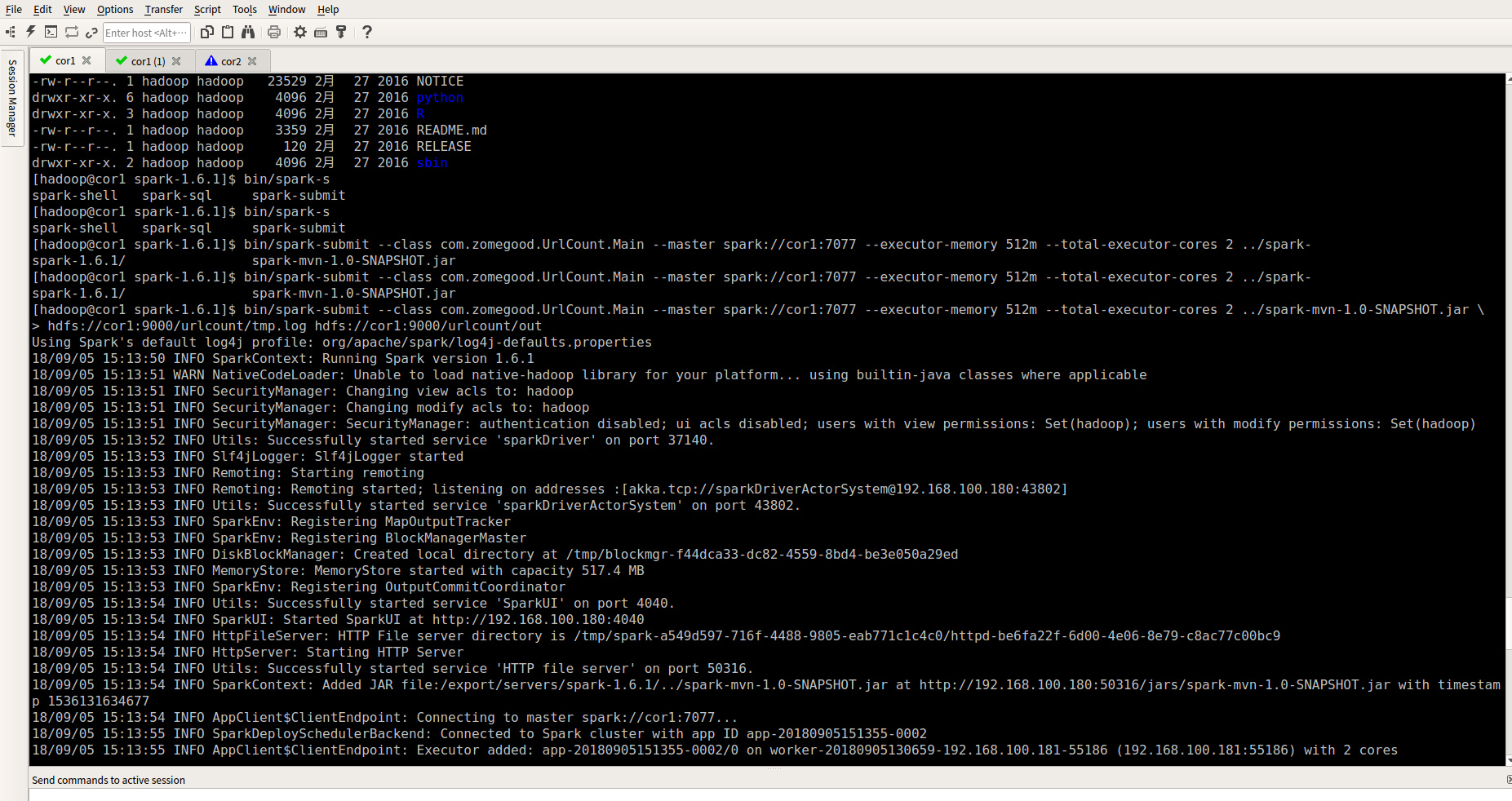

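Run on the cluster

Submit the job to the Spark master, passing the input file and the output directory as arguments. The jar name below is the one used in the original run; with the POM shown earlier, Maven names the artifact target/hellospark-1.0-SNAPSHOT.jar, so adjust the path to match your build.

    bin/spark-submit --class com.zomegood.UrlCount.Main --master spark://cor1:7077 --executor-memory 512m --total-executor-cores 2 ../spark-mvn-1.0-SNAPSHOT.jar hdfs://cor1:9000/urlcount/tmp.log hdfs://cor1:9000/urlcount/out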

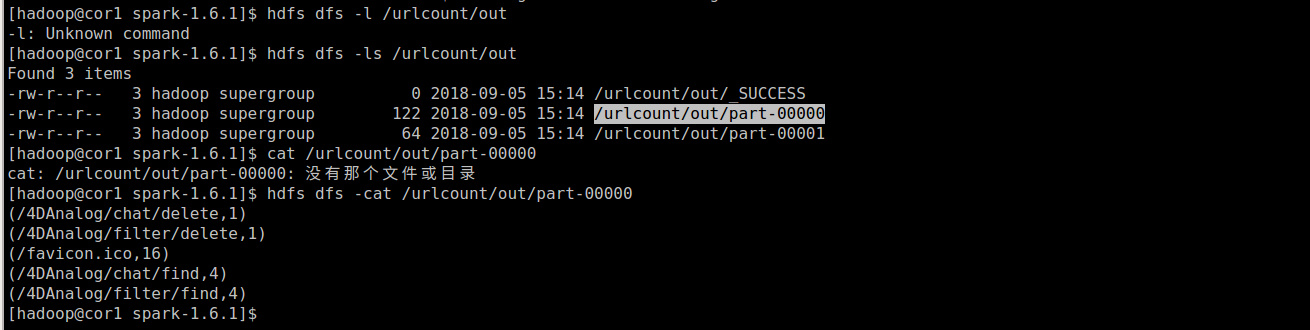

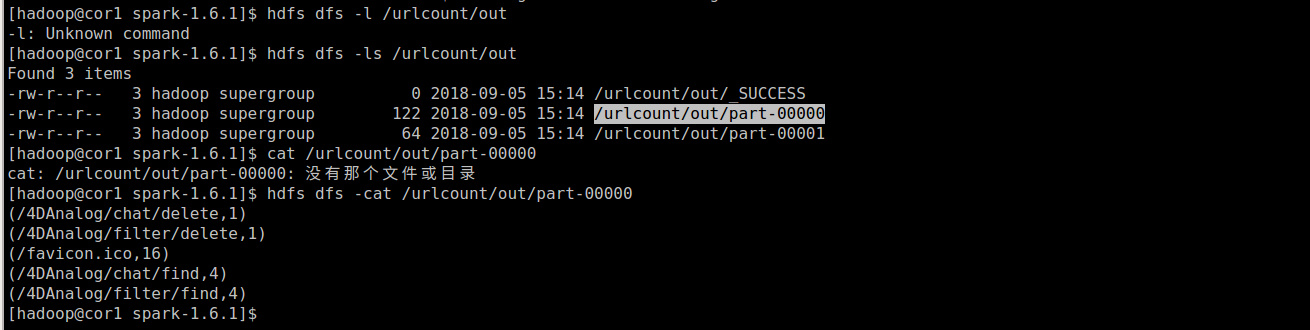

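Because the job ends with saveAsTextFile, the results are stored on HDFS as part files under the output directory passed as the second argument (hdfs://cor1:9000/urlcount/out here). The output directory must not exist before the job runs, otherwise the save fails.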
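saveAsTextFile writes each pair using its `toString`, so every output line looks like `(/favicon.ico,7)`. As a small sketch of post-processing, the code below parses such lines and ranks the URLs by hit count. `ResultLines`, `parse`, and `topUrls` are hypothetical names, and the counts in the example are the ones the 14-line excerpt at the top of this post would produce, not the totals for the full tmp.log.

```scala
import scala.util.Try

// Hypothetical post-processing helper; not part of the original project.
object ResultLines {
  /** Parses one output line such as "(/favicon.ico,7)" into (url, count). */
  def parse(line: String): Option[(String, Int)] = {
    val trimmed = line.trim
    val comma = trimmed.lastIndexOf(',') // the count follows the last comma
    if (trimmed.startsWith("(") && trimmed.endsWith(")") && comma > 0) {
      val url = trimmed.substring(1, comma)
      val countText = trimmed.substring(comma + 1, trimmed.length - 1)
      Try(countText.toInt).toOption.map(n => (url, n))
    } else None
  }

  /** Returns the n most requested URLs, highest count first; unparsable lines are skipped. */
  def topUrls(lines: Seq[String], n: Int): Seq[(String, Int)] =
    lines.flatMap(l => parse(l).toList).sortBy(p => -p._2).take(n)

  def main(args: Array[String]): Unit = {
    val output = Seq(
      "(/4DAnalog/clashreport/delete,2)",
      "(/favicon.ico,7)",
      "(/4DAnalog/chat/delete,1)",
      "(/4DAnalog/clashreport/find,4)"
    )
    topUrls(output, 2).foreach(println)
  }
}
```

The same ranking could be done inside the Spark job before saving; parsing the text output afterwards is only convenient when the result set is small.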